Modern self-driving vehicles rely on multi-camera rigs to see the world, but transforming those streams into 3D understanding is a challenge. Rig3R, Wayve’s latest advance in geometric foundation models, has been designed to power robust ego-motion and 3D structure estimation from embodied camera rigs

Robustly estimating the structure of the world and ego-motion of the vehicle has been a multi-decade pursuit in computer vision. Classical feature-based and photogrammetry methods have long defined the state of the art in multi-view geometry. However, recent advances have shown that large-scale, transformer-based learning can push this frontier even further. Still, these methods fall short in the autonomous vehicle setting, where multiple cameras continuously capture the world through synchronized, structured rigs.

Rig3R is the first learning-based method to explicitly use multi-camera rig constraints for accurate and robust 3D reconstruction. It achieves state-of-the-art performance in complex, real-world driving scenarios.

The solution extends geometric foundation models with the advantage of rig awareness, leveraging rig information when available and inferring rig structure and calibration when it is not. This flexibility is essential for handling the diverse and evolving sensor setups found in embodied AI systems.

How Rig3R works

Rig3R is a machine learning model that takes images from multiple cameras and builds an accurate 3D understanding of the world. A large Vision Transformer (ViT-Large) processes each input image, breaking it into small patch tokens using 2D sine-cosine positional embeddings. Each patch is enriched with a compact metadata tuple: Camera ID, Timestamp and a 6D Raymap, which encodes the camera’s pose relative to the rig. These fields provide spatial and temporal context, helping the model reason across multiple views and time.

A second ViT-Large decoder attends to all images jointly, across views and time, merging visual features, metadata and geometric cues into a shared latent space. During this fusion stage, Rig3R introduces a rig encoder to inject known rig constraints, enabling geometry-aware multi-view reasoning. The model is trained to leverage rig metadata when available but remains robust even when such information is missing. This fused representation forms the core of Rig3R’s multi-view 3D understanding.

Benchmarks and setup

Rig3R is evaluated on two multi-camera driving benchmarks: the Waymo Open validation set and WayveScenes101. Waymo provides lidar-based ground-truth, while WayveScenes101 uses COLMAP reconstructions. In the version presented in the recent paper, Rig3R is trained only on the Waymo training split, making WayveScenes101 an out-of-distribution test that evaluates generalization to unseen camera rigs.

Both datasets use five-camera rigs capturing approximately 200 frames per scene at 10fps. For evaluation, two 24-frame clips per scene are extracted, spaced approximately two seconds apart. Rig3R is benchmarked against feed-forward baselines, classical structure-from-motion and rig-aware methods.

The first objective, pose estimation, is assessed using relative rotation accuracy (RRA) and relative translation accuracy (RTA) at 5° and 15° thresholds, as well as mean average accuracy (mAA) up to 30°. The second objective, 3D pointmap reconstruction, is evaluated using accuracy, completeness and chamfer distance.

Results

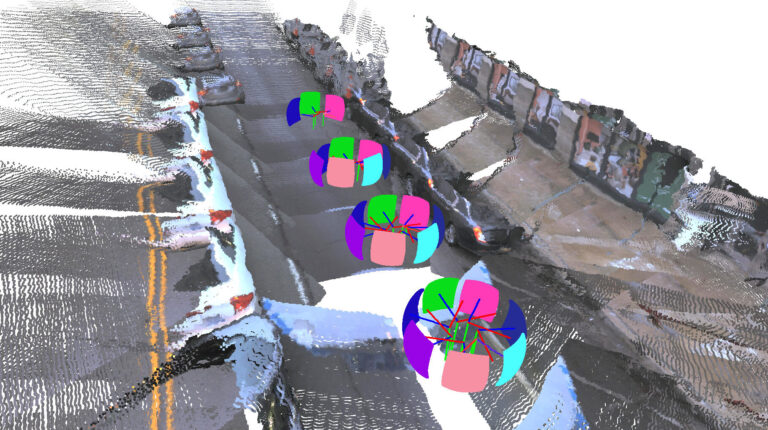

The figure above compares pose estimation and pointmap reconstruction across four methods — Fast3R, DUSt3R-GA, Rig3R (unstructured) and Rig3R (with rig constraints) — on the same driving scene. The progression shows how Rig3R’s rig awareness improves both geometric coherence and reconstruction quality: while baseline methods often produce noisy or spatially inconsistent pointmaps and poses, Rig3R yields sharper, more consistent structures with rig poses that align sensibly across all views.

Why metadata matters

As more metadata is introduced, both pose estimation and pointmap reconstruction improve: predicted camera trajectories progressively align with the physical rig and reconstructed pointmaps become sharper and more consistent. This analysis illustrates how such structured priors, often readily available in embodied and robotic systems, can be effectively integrated into a learned geometric model to enhance both accuracy and generalization.

Rig3R achieves strong pose estimation and dense 3D reconstruction across diverse rig setups and conditions, including changes in baseline, field of view, lighting, speed and weather. It produces stable, low-drift trajectories and metrically consistent pointmaps, even in challenging scenes and in-the-wild driving videos — maintaining performance despite partial or missing metadata.

Many open problems remain in the quest to build a spatially intelligent foundation model. In addition to scaling up training, future improvements to Rig3R could include streaming representations, handling scene motion, multimodal inputs, multiple embodiments and multi-task outputs.

This is an edited version of an article that first appeared on Wayve’s blog on October 15, 2025.

In related news, Aeva introduces open-access FMCW 4D lidar and camera dataset for autonomous vehicle research