From October 21-23, Automotive Testing Expo North America 2025 will gather industry experts from across the ecosystem to help accelerate development cycles for next-gen vehicles, autonomous vehicles, EVs and SDVs, while maintaining quality and compliance. However, according to Venkat Adusumalli, software engineering manager at Stellantis, legacy standards such as Autosar are struggling to keep pace with the complexity of software-defined vehicles, creating bottlenecks in integration and especially in testing.

During the Future of Automotive Testing Conference on October 22, Adusumalli will reveal how Stellantis is using AI to accelerate E/E system testing and validation workflows. ATTI speaks with him to find out more.

Why are legacy standards like Autosar struggling to keep pace with the complexity of software-defined vehicles?

Autosar has been instrumental in standardizing development across diverse teams and suppliers, but its methodology produces a large volume of configuration artifacts. This creates dependencies on multiple toolchains and environments, and the handoffs between teams often lead to rework and delays. In practice, much engineering effort is consumed in producing compliance evidence rather than in finding and fixing defects early. As SDV complexity grows, these inefficiencies become more pronounced, turning testing into a major bottleneck.

How is AI accelerating E/E system testing and validation workflows?

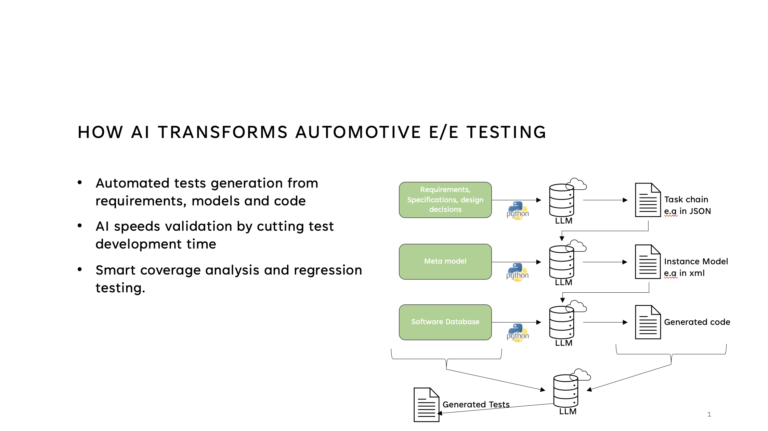

Large language models (LLMs) and AI-assisted tools can transform structured requirements, Autosar XML files (ARXMLs) and even code stubs directly into executable test cases. AI also enables prioritization of high-impact scenarios: the recurring problem areas that consume significant validation effort. By automatically generating tests that maximize coverage in these scenarios, AI accelerates validation cycles and allows engineering teams to focus on analyzing results rather than writing scripts.
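To make the idea of turning structured requirements into executable tests concrete, here is a minimal illustrative sketch. It is not Stellantis's tooling and uses no LLM: it is a plain template-based generator. The `Requirement` fields, the `fake_ecu` stand-in for a system under test, and the signal name are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Requirement:
    """A hypothetical structured requirement: apply a stimulus, expect a value."""
    req_id: str
    signal: str
    stimulus: float
    expected: float
    tolerance: float = 0.0


# Hypothetical interface to the system under test: (signal, stimulus) -> response.
SystemUnderTest = Callable[[str, float], float]


def make_test(req: Requirement, system: SystemUnderTest) -> Callable[[], bool]:
    """Build an executable check from one requirement."""
    def test() -> bool:
        actual = system(req.signal, req.stimulus)
        return abs(actual - req.expected) <= req.tolerance
    test.__name__ = f"test_{req.req_id}"  # keeps traceability to the requirement ID
    return test


def run_all(reqs: List[Requirement], system: SystemUnderTest) -> Dict[str, bool]:
    """Generate and run one test per requirement, keyed by requirement ID."""
    return {r.req_id: make_test(r, system)() for r in reqs}


def fake_ecu(signal: str, stimulus: float) -> float:
    """Stand-in model (assumption): brake torque is twice the pedal input."""
    if signal == "brake_torque":
        return 2.0 * stimulus
    return stimulus


REQUIREMENTS = [
    Requirement("REQ_001", "brake_torque", 10.0, 20.0, 0.1),
    Requirement("REQ_002", "brake_torque", 50.0, 90.0, 0.1),  # deliberately wrong expectation
]

results = run_all(REQUIREMENTS, fake_ecu)
print(results)
```

In a real project the stand-in would be replaced by a SIL or HIL adapter, and an AI-assisted tool would sit upstream, producing the structured requirement records from natural-language text or ARXML.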

What are some of the ways to automate test-case generation and regression testing?

Automation can be applied at several layers of the testing stack. In ARXML validation, generated Autosar XML configurations can be tested automatically to ensure correctness. In unit and interface testing, tests can be automated at the ECU level, including communication and interface validation. In coverage testing, there can be automation of safety-critical metrics such as modified condition/decision coverage (MC/DC), which is required under ISO 26262. And in requirements-based testing, structured requirements can be mapped directly into executable tests for HIL or SIL environments.
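As an illustration of what automating an MC/DC check can look like, the sketch below tests whether a set of input vectors achieves unique-cause MC/DC for a single Boolean decision. For each condition it looks for a pair of vectors that differ only in that condition and flip the decision outcome. The function names and the example decision `a and (b or c)` are assumptions for illustration. Qualified coverage tools work on instrumented code rather than on a hand-written predicate.

```python
from itertools import combinations
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Tuple[bool, ...]


def mcdc_pairs(
    decision: Callable[..., bool],
    tests: Sequence[Vector],
    n_conditions: int,
) -> Dict[int, List[Tuple[Vector, Vector]]]:
    """For each condition index, collect independence pairs: two test vectors
    that differ only in that condition and yield different decision outcomes."""
    pairs: Dict[int, List[Tuple[Vector, Vector]]] = {i: [] for i in range(n_conditions)}
    for a, b in combinations(tests, 2):
        diff = [i for i in range(n_conditions) if a[i] != b[i]]
        if len(diff) == 1 and decision(*a) != decision(*b):
            pairs[diff[0]].append((a, b))
    return pairs


def achieves_mcdc(
    decision: Callable[..., bool],
    tests: Sequence[Vector],
    n_conditions: int,
) -> bool:
    """True if every condition has at least one independence pair."""
    pairs = mcdc_pairs(decision, tests, n_conditions)
    return all(pairs[i] for i in range(n_conditions))


def decision(a: bool, b: bool, c: bool) -> bool:
    """Example decision with three conditions (hypothetical)."""
    return a and (b or c)


# Four vectors (n + 1 for n = 3 conditions) chosen to give each condition a pair.
tests = [
    (True, True, False),
    (False, True, False),   # pairs with the first vector to show the effect of a
    (True, False, False),   # pairs with the first vector to show the effect of b
    (True, False, True),    # pairs with the third vector to show the effect of c
]

print(achieves_mcdc(decision, tests, 3))      # full set
print(achieves_mcdc(decision, tests[:2], 3))  # only the first two vectors
```

A generator can use the same check in reverse: keep proposing vectors until every condition has an independence pair, then stop.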

What practical steps can companies take to adopt AI-powered testing in automotive projects?

Identify pain points such as repetitive failures or time-consuming test design activities. Pilot AI on use cases, such as ARXML validation or regression subset selection. Integrate with existing frameworks so teams don’t have to abandon established toolchains. Ensure compliance and traceability to meet standards like ISO 26262, ASPICE and tool qualification requirements. Iterate and refine – the quality of generated code, ARXMLs and test scripts improves as feedback loops mature.
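Regression subset selection, one of the pilot use cases mentioned above, can be sketched in its simplest form as a lookup: run only the tests that touch a changed component. The coverage map, test names and component names below are hypothetical, and the selection is a plain set intersection rather than a learned model.

```python
from typing import Dict, List, Set


def select_regression_subset(
    test_to_components: Dict[str, Set[str]],
    changed: Set[str],
) -> List[str]:
    """Return, in sorted order, the tests that exercise any changed component."""
    return sorted(
        test for test, components in test_to_components.items()
        if components & changed
    )


# Hypothetical mapping from test cases to the software components they exercise.
COVERAGE_MAP: Dict[str, Set[str]] = {
    "test_can_rx_timeout": {"ComStack", "CanIf"},
    "test_nvm_write": {"NvM"},
    "test_diag_session": {"Dcm", "ComStack"},
}

selected = select_regression_subset(COVERAGE_MAP, {"ComStack"})
print(selected)  # ['test_can_rx_timeout', 'test_diag_session']
```

An AI-assisted variant would typically replace the fixed map with a model that ranks tests by predicted failure likelihood, while the existing framework still executes the selected tests.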

Are there any real-world examples of improved testing speed and coverage that you can share?

In our pilot work with ARXML generation and testing, we saw test cycle times drop from weeks to just a few hours. Perhaps even more impactful, the OEM-supplier rework loops in ARXML handovers were reduced from roughly seven cycles down to two in some cases, simply by regenerating correct ARXML configurations from structured requirements and a meta-model. This not only improved speed but also reduced friction across the supply chain.

What are some of the challenges that SDVs pose for conventional testing and validation, and how can they be solved?

The key challenge we face is integration. Testing must ensure seamless interaction of various software systems running in different environments. Another challenge is scale, where validating distributed architectures makes full coverage extremely difficult. Complying with standards for functional safety and with ASPICE in every iteration of software release is also a challenge. Proposed solutions include using virtual environments for validation, which can provide high test coverage, and using AI/ML for test generation to speed up testing. Another option is to set up continuous validation pipelines that run regression tests on every iteration of software release.

Why did Stellantis choose to speak on this topic at The Future of Automotive Testing Conference?

It addresses a top pain point for OEMs and Tier 1s. We have measured wins on piloted use cases. We want to catalyze an industry move toward AI-accelerated validation, and hope to meet Tier 1s or startups working in AI-accelerated testing, test analytics and traceability.

Hear from Adusumalli at 11:30am during the Future of Automotive Testing Conference on October 22. Visit the Automotive Testing Expo North America website to find out more about this year’s event and to secure your attendance.